Generate DQ Rules (AI-Powered)

Overview

The Generate DQ Rules feature is an AI-powered capability that automatically creates data quality rules from source database objects and imports them into a Data Quality Hub. This feature analyzes metadata from database tables and generates comprehensive validation rules, significantly reducing manual effort.

Key Features

- AI-Powered Rule Generation - Automatically generates intelligent DQ rules based on metadata analysis

- Multi-Source Support - Works with MySQL, PostgreSQL, SQL Server, and other supported data sources

- Automated Sync - Optional scheduling for periodic synchronization of DQ rules

- DQ Hub Integration - Seamlessly imports generated rules into Data Quality Hub

Business Value

- Reduces manual effort in creating data quality rules

- Ensures consistency in data quality monitoring

- Accelerates data governance initiatives

- Provides intelligent rule suggestions based on data patterns

Prerequisites

1. Source Data Source Configuration

Before using this feature, ensure:

- Source data source (MySQL, PostgreSQL, SQL Server, etc.) is configured and connected

- Source data source supports AI-based DQ rule generation feature

- User has appropriate permissions to access source data source

- Database tables/objects are accessible and metadata is available

2. Target DQ Hub Configuration

- Target Data Quality Hub is configured

- User has permissions to create/import rules into DQ Hub

- DQ Hub is operational and reachable

3. System Requirements

- AI services must be available and configured

- Network connectivity between services

Ensure the source data source is tested and operational before attempting rule generation.

Use Cases

Use Case 1: Onboarding New Database to DQ Framework

Scenario: A new production database needs to be monitored for data quality.

Steps:

- Configure source data source connection

- Identify all critical tables in the database

- Generate DQ rules for these tables

- Enable daily scheduling for ongoing monitoring

- Review and adjust generated rules in DQ Hub

Benefits:

- Quick onboarding of new databases

- Automated rule creation saves time

- Continuous monitoring with scheduling

Use Case 2: Migration Quality Assurance

Scenario: Validating data quality during database migration.

Steps:

- Generate DQ rules from source system

- Apply same rules to target system

- Compare rule execution results between systems

- Identify discrepancies and data integrity issues

Benefits:

- Ensures data integrity during migration

- Automated validation process

- Consistent quality checks across environments
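The comparison step in this use case can be sketched in Python. This is a minimal illustration, not part of the product: it assumes the rule execution results have been exported from each system as a mapping of rule name to failed-record count. The function name `compare_rule_results` and the sample rule names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Mismatch = Tuple[str, Optional[int], Optional[int]]


def compare_rule_results(source: Dict[str, int], target: Dict[str, int]) -> List[Mismatch]:
    """Return (rule, source_failures, target_failures) for every rule that differs.

    A value of None means the rule has no result in that system.
    """
    mismatches: List[Mismatch] = []
    for rule in sorted(set(source) | set(target)):
        source_failures = source.get(rule)
        target_failures = target.get(rule)
        if source_failures != target_failures:
            mismatches.append((rule, source_failures, target_failures))
    return mismatches


# Hypothetical exported results: rule name -> number of failed records.
source_results = {"customers.email NOT_NULL": 0, "orders.amount RANGE": 2}
target_results = {
    "customers.email NOT_NULL": 0,
    "orders.amount RANGE": 5,
    "orders.zip LENGTH": 1,
}

mismatches = compare_rule_results(source_results, target_results)
for rule, src, tgt in mismatches:
    print(f"{rule}: source={src} target={tgt}")
```

Rules that appear in only one system are reported with `None` on the missing side, which helps spot rules that were never applied to the target.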

Use Case 3: Compliance Monitoring

Scenario: Ensuring regulatory compliance through data quality checks.

Steps:

- Identify compliance-critical tables (e.g., customer data, financial records)

- Generate DQ rules for these tables

- Schedule daily rule execution

- Monitor violations and configure alerts

Benefits:

- Proactive compliance monitoring

- Audit trail of data quality

- Automated alerting for violations

Use Case 4: Data Profiling and Discovery

Scenario: Understanding data patterns and quality issues in existing databases.

Steps:

- Run DQ rule generation for all tables

- Review generated rules to understand data patterns

- Identify quality issues (nulls, duplicates, format violations)

- Implement remediation strategies

Benefits:

- Data discovery and profiling

- Pattern identification

- Quality issue detection

Step-by-Step Usage Guide

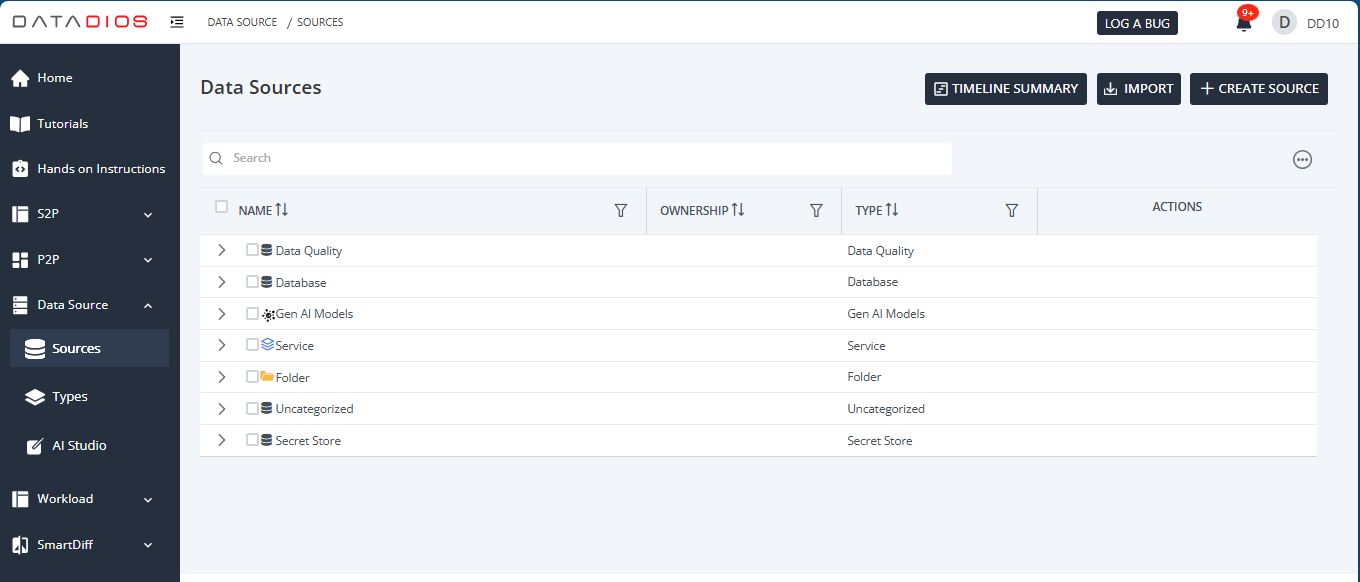

Step 1: Navigate to Data Source

- Access Data Sources

- Navigate to Data Source → Sources from the left sidebar

- Locate your source database in the data source list

- Ensure the data source is in a connected state

Both the source data source and the target DQ Hub must be connected before proceeding.

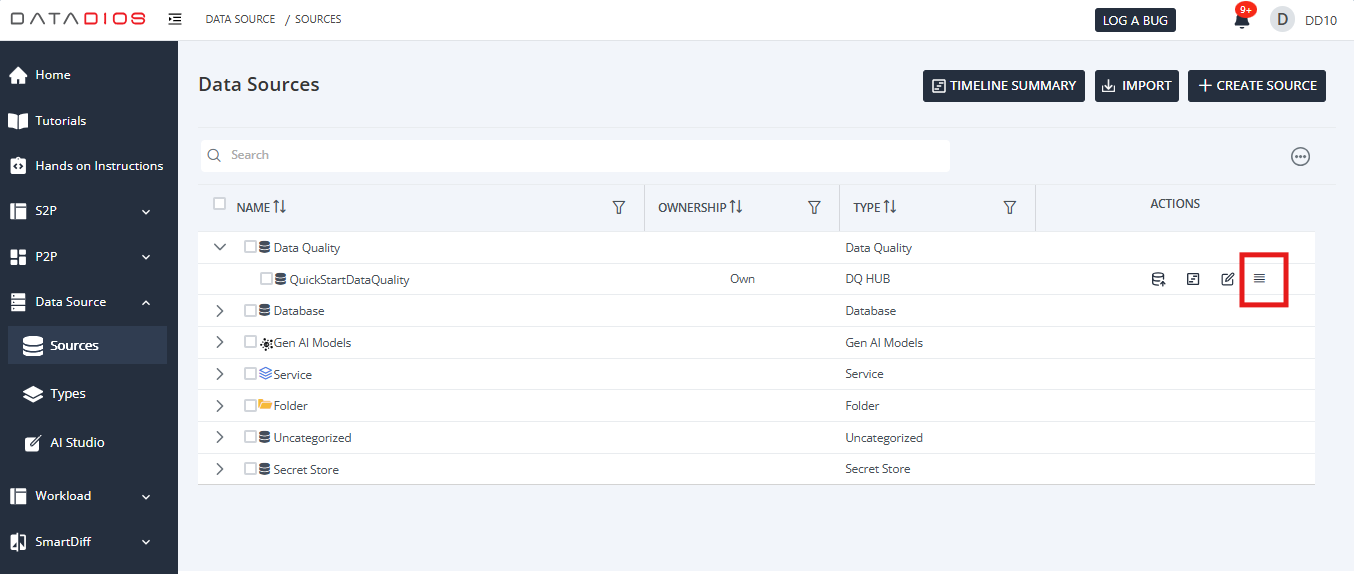

Step 2: Access Generate DQ Rules Feature

- Open Rule Generation Interface

- Click on your source data source

- Look for the Generate DQ Rules option or button

- Click to open the rule generation interface

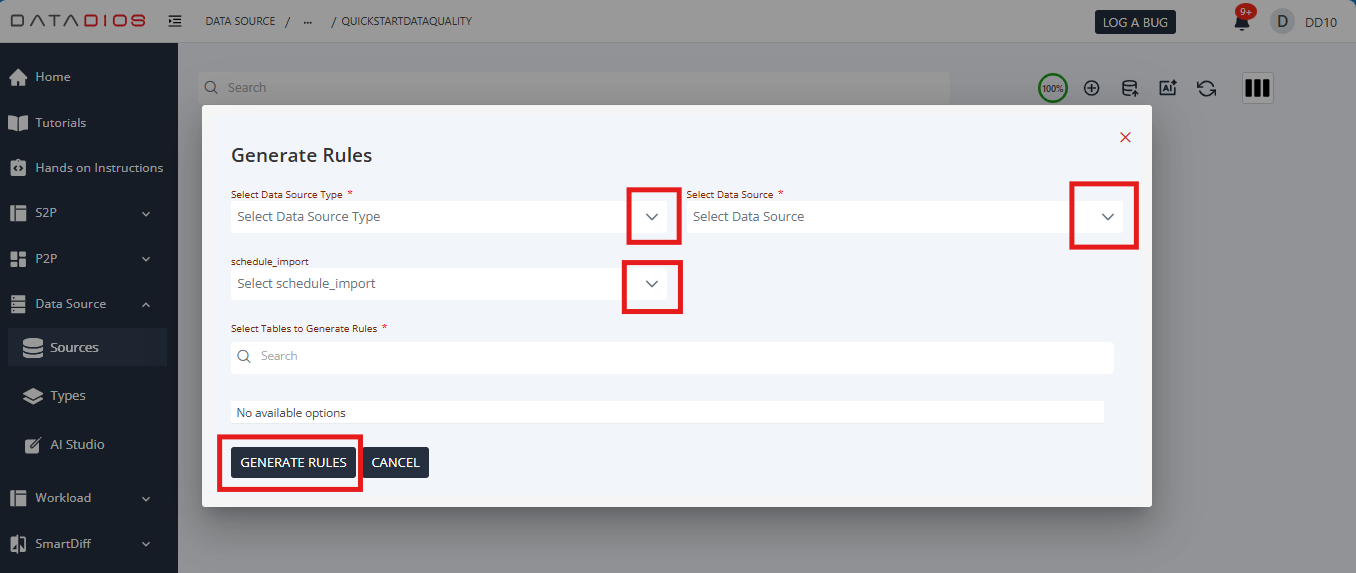

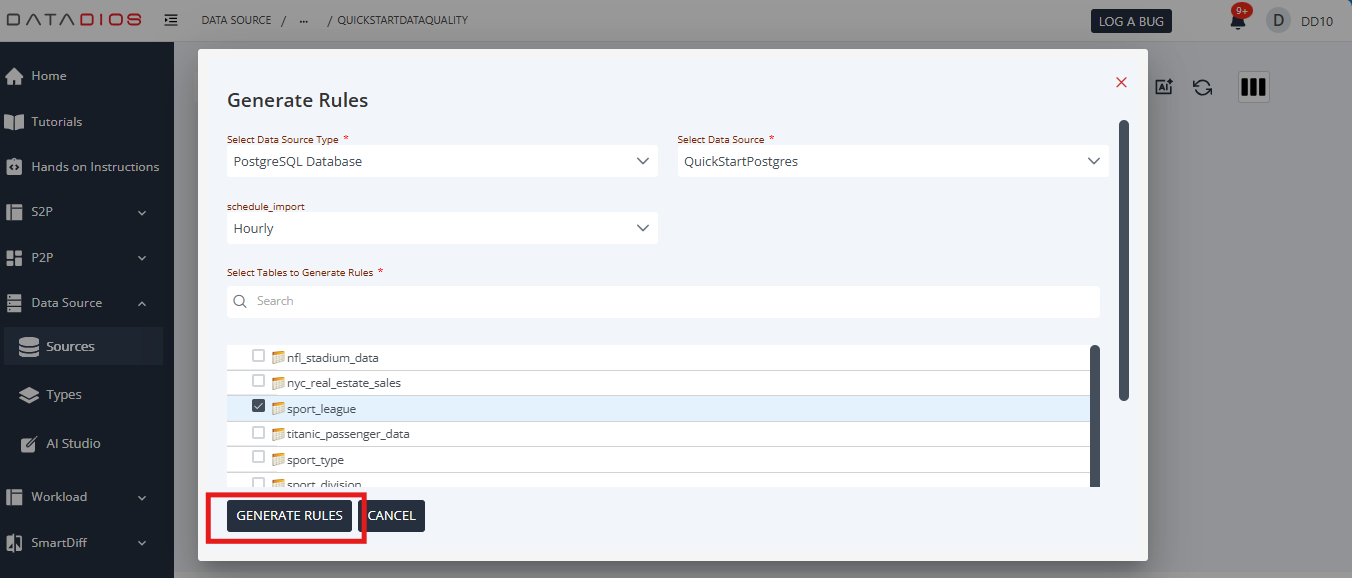

Step 3: Configure Rule Generation Settings

- Select Source and Target

- Source Data Source: Confirm the selected source database

- Target DQ Hub: Select the destination DQ Hub for generated rules

- Choose Database Objects

- Select schema(s) from the dropdown

- Choose specific tables for rule generation

- Consider starting with 5-10 critical tables

Selection Criteria:

- Business Criticality - Focus on high-value business data

- Compliance Requirements - Include regulatory-required tables

- Data Volume - Start with critical tables before scaling

- Data Change Frequency - Prioritize frequently updated tables
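If the candidate list is long, the selection criteria above can be turned into a rough ranking to pick the first batch of tables. The sketch below is only one possible approach: the `Candidate` fields, the equal weighting, and the table names are all assumptions made for illustration, and data volume is left out because the guidance there is about sequencing rather than priority.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    business_critical: bool
    compliance_required: bool
    changes_often: bool


def shortlist(candidates: List[Candidate], limit: int = 10) -> List[str]:
    """Rank tables by how many criteria they meet and keep the top `limit`."""

    def score(c: Candidate) -> int:
        # Equal weights are an assumption; adjust them to your own priorities.
        return int(c.business_critical) + int(c.compliance_required) + int(c.changes_often)

    # Highest score first; ties are broken alphabetically for a stable order.
    ranked = sorted(candidates, key=lambda c: (-score(c), c.name))
    return [c.name for c in ranked[:limit]]


candidates = [
    Candidate("audit_log", False, True, True),
    Candidate("customers", True, True, True),
    Candidate("tmp_import", False, False, False),
    Candidate("orders", True, False, True),
]

first_batch = shortlist(candidates, limit=3)
print(first_batch)
```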

Step 4: Configure Scheduling (Optional)

- Set Up Automated Sync

- Enable Schedule Import if you want periodic rule synchronization

- Choose frequency: Daily, Weekly, or Monthly

- Select appropriate time for scheduled execution

Recommended Frequencies:

- Daily - For frequently changing transactional data

- Weekly - For moderate change frequency (e.g., analytical tables)

- Monthly - For stable master data (e.g., reference tables)

Schedule during off-peak hours to minimize system load and ensure optimal performance.

Step 5: Initiate Rule Generation

- Review Configuration

- Verify source and target data sources

- Confirm selected tables/schemas

- Review scheduling settings (if enabled)

- Start Generation

- Click the Generate or Create button

- Monitor the progress indicator

- Wait for completion notification

Rule generation may take a few minutes depending on the number of tables and complexity of metadata.

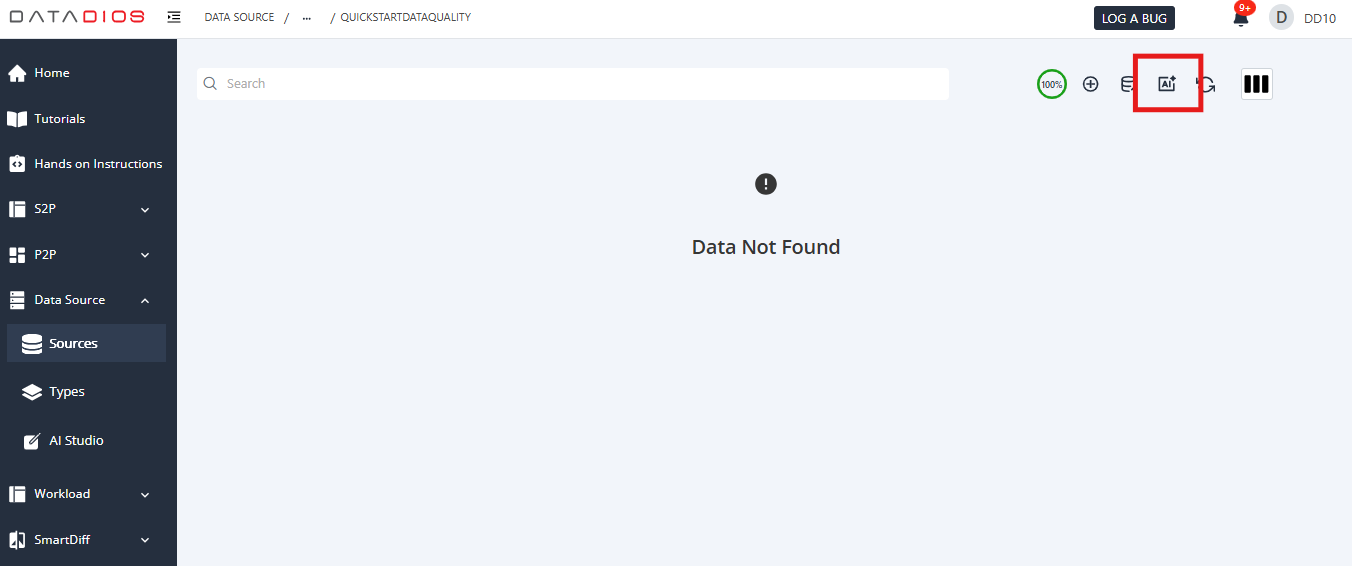

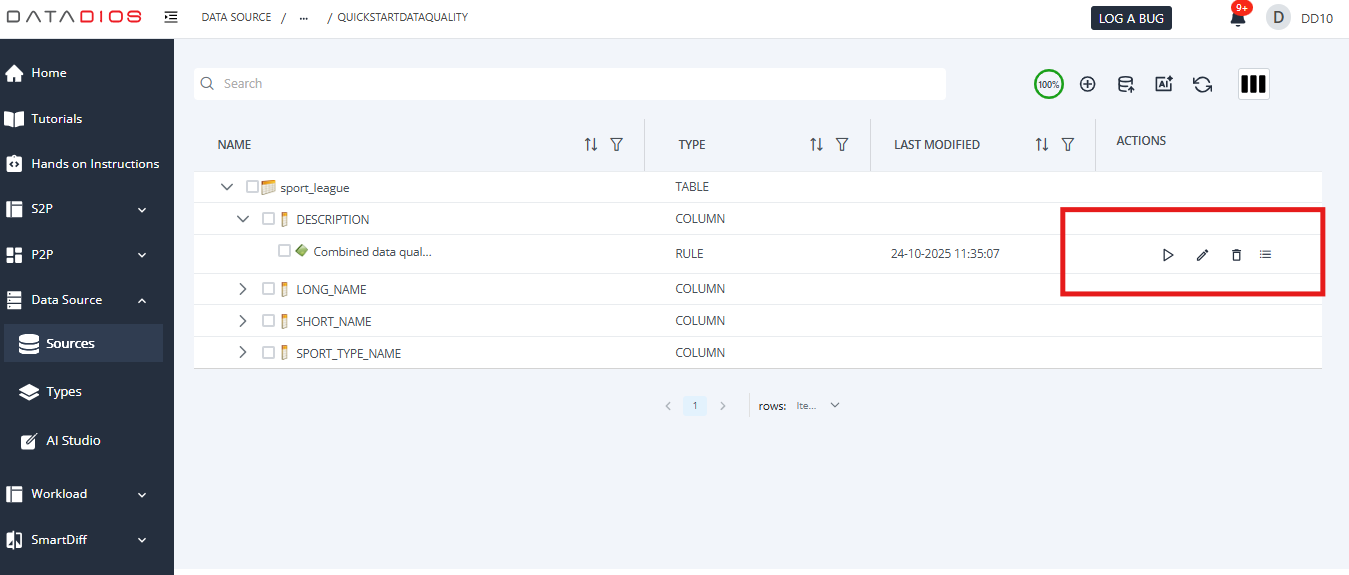

Step 6: Review Generated Rules

- Navigate to DQ Hub

- Go to Data Source → Sources

- Expand your target DQ Hub

- Navigate through the hierarchy: Domain → Schema → Table → Column

- Examine Rule Details

- Review generated rule types (NOT_NULL, UNIQUE, RANGE, etc.)

- Check rule descriptions and SQL logic

- Verify severity levels (Low, Medium, High)

- Review scoring types and points

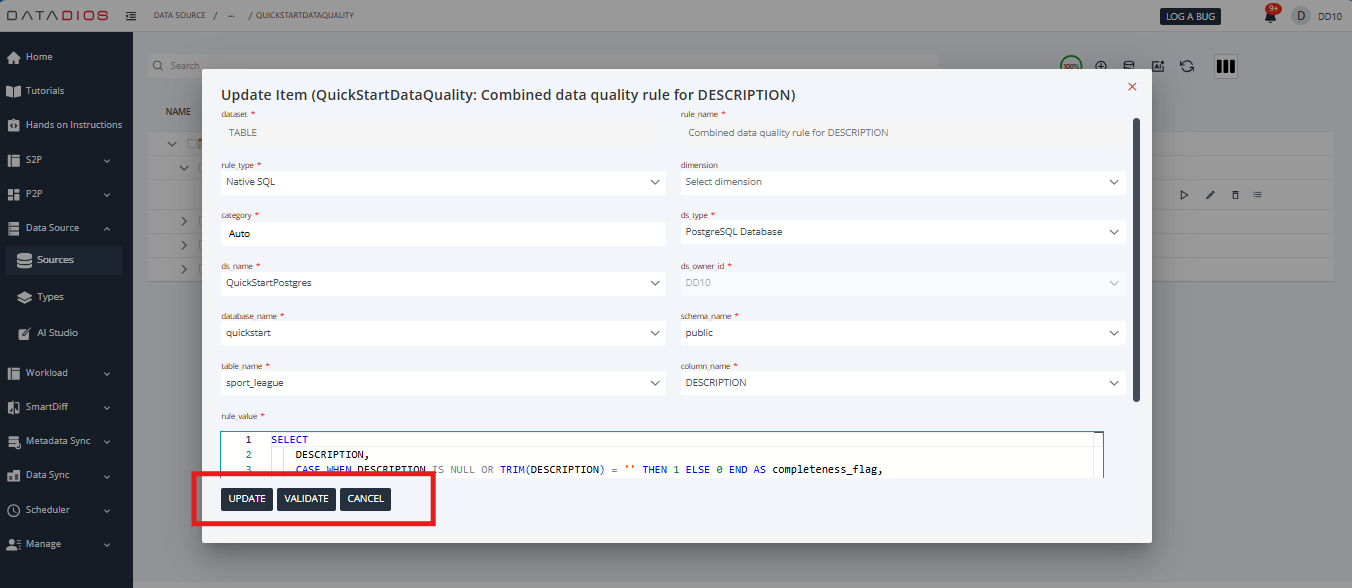

Step 7: Customize and Execute Rules

- Customize Rules as Needed

- Click Edit on any rule to modify it

- Adjust severity levels based on business impact

- Modify scoring types and point values

- Update descriptions for clarity

- Add or modify rule logic if needed

- Execute Rules

- Select individual rules or all rules for a table

- Click Run Now to trigger immediate execution

- Monitor execution progress

- View Results

- Navigate to Rule Details → Run Details

- Review validation results

- Analyze failed records

- Track historical trends

Best Practices

1. Object Selection

Do:

- Start small with 5-10 critical tables before scaling

- Prioritize business-critical and compliance-required tables

- Group related tables together for consistent rule generation

- Focus on tables with high data quality impact

Don't:

- Include all tables at once in initial runs

- Generate rules for temporary or staging tables

- Overlook dependencies between related tables

- Skip validation of source data accessibility

2. Scheduling

Timing Considerations:

- Schedule during off-peak hours to minimize system load

- Monitor resource usage after scheduling

- Adjust frequency based on data change patterns

- Coordinate with other scheduled jobs

Frequency Selection:

- Daily: Transactional tables, customer data, order processing

- Weekly: Analytical tables, aggregated data, reports

- Monthly: Master data, reference tables, configuration data

3. Rule Management

After Generation:

- Always review and validate generated rules before production use

- Test rules on sample data to verify behavior

- Customize rule parameters to match business requirements

- Document any modifications to AI-generated rules

- Set appropriate severity levels based on business impact
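One way to test a rule's SQL logic on sample data before production use is to run it against a small scratch table. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table, the sample rows, and the two rule queries are made up for illustration, and the SQL that DQ Hub actually generates may look different.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "a@example.com", 34),   # passes both rules
        (2, None, 51),              # violates the NOT_NULL rule
        (3, "c@example.com", 140),  # violates the RANGE rule
    ],
)

# Hypothetical rule SQL: each query returns the ids of rows that violate the rule.
rules = {
    "email NOT_NULL": "SELECT id FROM customers WHERE email IS NULL",
    "age RANGE 0-120": "SELECT id FROM customers WHERE age NOT BETWEEN 0 AND 120",
}

failures = {name: [row[0] for row in conn.execute(sql)] for name, sql in rules.items()}
conn.close()

for name, ids in failures.items():
    print(f"{name}: failing ids {ids}")
```

Seeding the scratch table with one known-good row and one known-bad row per rule makes it easy to confirm that each rule flags exactly the rows you expect.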

Ongoing Maintenance:

- Periodically review rule effectiveness (monthly or quarterly)

- Update rules when schema changes occur

- Archive or delete obsolete rules

- Monitor rule execution performance and optimize slow queries

- Keep rule descriptions up-to-date

Troubleshooting

Issue 1: Rule Generation Fails

Symptoms:

- Error message displayed during generation

- No rules created in target DQ Hub

- Process hangs or times out

Possible Causes:

- Source data source connectivity issues

- Insufficient permissions

- AI service unavailability

- Invalid schema or table names

Solutions:

- Verify source data source connection status using TEST CONNECTION

- Check user permissions on both source and target data sources

- Validate schema and table names exist in the source database

- Contact system administrator to verify AI service availability

- Review error messages for specific details

Issue 2: Generated Rules Not Appearing

Symptoms:

- Generation completes successfully but rules not visible

- Rules not showing in DQ Hub interface

- Empty rule list in target DQ Hub

Solutions:

- Refresh the DQ Hub interface (click refresh icon or reload page)

- Clear browser cache and reload

- Verify user permissions on target DQ Hub

- Wait a few moments for cache synchronization

- Expand the correct schema and table in the hierarchy

- Check if rules were imported to a different DQ Hub

Issue 3: Incomplete Rule Generation

Symptoms:

- Some tables have rules generated, others don't

- Partial failure in batch processing

- Fewer rules than expected

Diagnosis Steps:

- Check if metadata exists for all selected objects

- Verify all tables are accessible

- Review source database permissions

Solutions:

- Retry with smaller batches of tables (2-3 tables at a time)

- Verify metadata exists for all selected tables

- Check table accessibility and permissions

- Review source database user privileges

- Ensure tables are not empty (AI needs sample data for rule generation)

- Check for special characters in table or schema names
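Two of the solutions above, retrying in small batches and checking names for special characters, are easy to script when the table list is long. The sketch below is a hedged illustration: the helper names are hypothetical, and "special characters" is assumed to mean anything other than letters, digits, and underscores, which may be stricter or looser than what your source database allows.

```python
import re
from typing import Iterator, List


def batches(tables: List[str], size: int = 3) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` table names."""
    for start in range(0, len(tables), size):
        yield tables[start:start + size]


def names_with_special_chars(names: List[str]) -> List[str]:
    """Return names containing anything other than letters, digits, or underscores."""
    # Assumption: this character set defines a "plain" name.
    return [n for n in names if re.fullmatch(r"[A-Za-z0-9_]+", n) is None]


tables = ["customers", "orders", "order-items", "payments", "cust omer", "invoices", "refunds"]

suspicious = names_with_special_chars(tables)
retry_plan = list(batches([t for t in tables if t not in suspicious], size=3))

print("Check these names first:", suspicious)
for group in retry_plan:
    print("Retry batch:", group)
```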

Issue 4: Schedule Not Working

Symptoms:

- Scheduling enabled but rules not synchronized periodically

- No automatic rule updates occurring

- Schedule not showing in workflow list

Solutions:

- Verify scheduling was enabled during rule generation

- Check scheduler service is running (contact system administrator)

- Ensure user has scheduling permissions

- Verify no conflicting schedules exist for the same data sources

- Review schedule configuration (frequency, time, etc.)

- Check scheduler logs for errors

Issue 5: Incorrect or Unexpected Rules

Symptoms:

- Generated rules don't match expected patterns

- Too many or too few rules per table

- Rule logic seems incorrect

Solutions:

- Review table metadata (data types, constraints, indexes)

- Check if table has sufficient sample data for AI analysis

- Verify column names and data types are standard

- Customize generated rules to match business requirements

- Delete unwanted rules and create manual rules if needed

- Provide feedback to system administrator for AI model improvements

Appendix

A. Supported Data Source Types

| Data Source Type | DQ Rule Generation Support | Notes |
|---|---|---|
| MySQL | Yes | Full support for all rule types |
| PostgreSQL | Yes | Full support for all rule types |
| SQL Server | Yes | Full support for all rule types |

B. Generated Rule Types

The AI engine can generate the following rule types:

| Rule Type | Description | Example Use Case |
|---|---|---|
| NOT_NULL | Validates non-null values | Email addresses, customer IDs |
| RANGE | Validates numeric ranges | Age (0-120), Percentage (0-100) |
| FORMAT | Validates string formats | Email format, phone number format |
| PATTERN | Regex pattern matching | ZIP codes, custom ID patterns |
| DATA_TYPE | Data type validation | Ensures consistent data types |
| LENGTH | String length validation | ZIP code length = 5, State code = 2 |

The AI engine analyzes metadata including data types, constraints, indexes, and sample data to determine the most appropriate rule types for each column.
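To make the rule types concrete, the sketch below expresses most of them as simple Python predicates and applies them to one sample row. It only illustrates what each rule type checks. How the AI engine or DQ Hub implements these rules is not described here, and the helper names, column names, and sample values are assumptions. FORMAT is omitted because in this sketch it would be another `pattern` check with a format-specific regular expression.

```python
import re
from typing import Any, Callable, Dict

Check = Callable[[Any], bool]


def not_null() -> Check:
    return lambda v: v is not None


def in_range(low: float, high: float) -> Check:
    return lambda v: isinstance(v, (int, float)) and low <= v <= high


def pattern(regex: str) -> Check:
    compiled = re.compile(regex)
    return lambda v: isinstance(v, str) and compiled.fullmatch(v) is not None


def length(expected: int) -> Check:
    return lambda v: isinstance(v, str) and len(v) == expected


def data_type(expected: type) -> Check:
    return lambda v: isinstance(v, expected)


# Illustrative column rules based on the example use cases in the table.
rules: Dict[str, Check] = {
    "customer_id": not_null(),       # NOT_NULL
    "age": in_range(0, 120),         # RANGE
    "zip_code": pattern(r"\d{5}"),   # PATTERN
    "state_code": length(2),         # LENGTH
    "order_total": data_type(float), # DATA_TYPE
}

row = {
    "customer_id": 17,
    "age": 130,           # outside 0-120
    "zip_code": "02139",
    "state_code": "MAS",  # three characters instead of two
    "order_total": 19.99,
}

failed = sorted(col for col, check in rules.items() if not check(row[col]))
print("Columns failing their rule:", failed)
```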

C. Schedule Intervals

| Schedule Frequency | Interval | Description |
|---|---|---|
| Daily | Every 24 hours | Executes once per day at specified time |
| Weekly | Every 7 days | Executes once per week on specified day |
| Monthly | Every 30 days | Executes on first day of each month |
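
The Interval column can be read as a fixed offset from the previous run. The sketch below follows that column literally, which is an assumption: the Monthly description mentions the first day of each month, and a 30-day offset drifts away from calendar months, so confirm how your scheduler actually behaves. `next_run` is a hypothetical helper, not a product API.

```python
from datetime import datetime, timedelta

# Offsets taken from the Interval column of the table above.
INTERVALS = {
    "Daily": timedelta(hours=24),
    "Weekly": timedelta(days=7),
    "Monthly": timedelta(days=30),
}


def next_run(last_run: datetime, frequency: str) -> datetime:
    """Return the next run time as last_run plus the fixed interval."""
    return last_run + INTERVALS[frequency]


last = datetime(2024, 1, 1, 2, 0)  # an off-peak 02:00 run on 1 January 2024
for frequency in INTERVALS:
    print(frequency, next_run(last, frequency))
```

With a fixed 30-day offset, a monthly run that starts on 1 January next falls on 31 January rather than 1 February, which shows the drift mentioned above.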

Related Documentation

- Data Quality Overview - Manual DQ rule creation

- Data Sources - Data source configuration

- Scheduling - Schedule management